Degenerate Feedback Loops In Machine Learning

In machine learning, feedback refers to the evaluation of how good or bad the generated prediction is. It helps in enhancing the machine learning system iteratively.

In many machine learning systems, accurate feedback on a prediction is hard or even impossible to obtain. Consider an object detection use case: when the model fails to detect an object, it takes manual effort to find out which data point it failed on and why.

However, there are some use cases, like recommender systems, where we receive feedback within seconds, based on whether the user clicks on the recommendation. This type of feedback is referred to as natural labels.

In this blog post, we’ll discuss degenerate feedback loops. A degenerate feedback loop is a phenomenon where the predictions made by the model influence the feedback, which in turn influences the next iteration of the model. In simple terms, the system’s outputs (predictions) become inputs to the system’s training in the next cycle, leading to unintended consequences.

Degenerate feedback loops are quite common in use cases where we receive natural labels, such as recommender systems or ads click-through rate prediction.

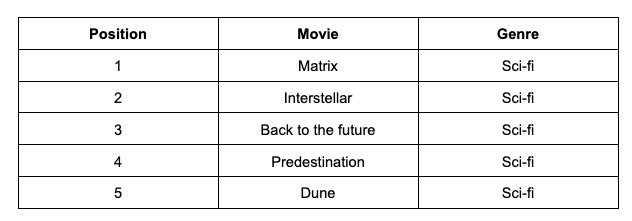

To understand this better, consider a movie recommendation system. For a given user, it recommends sci-fi movies based on their watch history, ordered by recommendation score (rank). Movies shown at the top are usually clicked more often than movies at the bottom.

Suppose Matrix is ranked just above Interstellar. Since Matrix is on top, it gets clicked more often, which raises its recommendation score. This widens the gap between Matrix and Interstellar on the rank metric, which puts Matrix on top even more often. As this continues, Matrix ends up with a much higher rank than Interstellar.
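The loop described here can be sketched in a few lines of Python. This is only a toy simulation under stated assumptions: the click counts per slot (`CLICKS_BY_SLOT`), the starting scores, and the rule "score = accumulated clicks" are all made up for illustration, and both movies are assumed to be equally appealing.

```python
# Hypothetical expected clicks per round for each slot: the top slot gets
# three times as many clicks as the second slot, regardless of the movie.
CLICKS_BY_SLOT = [300, 100]


def simulate(initial_scores, rounds):
    """Re-rank by score each round, then add each slot's clicks to its movie."""
    scores = dict(initial_scores)
    history = []
    for _ in range(rounds):
        ranking = sorted(scores, key=scores.get, reverse=True)
        for slot, movie in enumerate(ranking):
            scores[movie] += CLICKS_BY_SLOT[slot]
        history.append(dict(scores))
    return history


# Matrix starts with a one-point lead, which is enough to claim the top slot.
history = simulate({"Matrix": 101, "Interstellar": 100}, rounds=5)
gaps = [scores["Matrix"] - scores["Interstellar"] for scores in history]

for round_number, gap in enumerate(gaps, start=1):
    print(f"round {round_number}: Matrix leads by {gap}")
```

Even though neither movie is better than the other in this setup, the initial one-point lead grows every round, because the clicks reward the position rather than the movie.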

Degenerate feedback loops are one of the reasons why popular movies, books, and songs keep getting more popular, which makes it difficult for new items to reach the top. This happens a lot in real-time systems and goes by names such as exposure bias, popularity bias, filter bubbles, or echo chambers.

How to detect degenerate feedback loops?

Degenerate feedback loops are hard to detect while a system is offline. They are caused by user feedback, and a system receives no user feedback until it is online. For recommender systems, however, it is possible to detect degenerate feedback loops offline by measuring the popularity diversity of the system’s outputs.

An item’s popularity can be measured by how many times it has been interacted with (e.g., seen, liked, watched) in the past. Item popularity typically follows a long-tail distribution: a small number of items are interacted with a lot, while most items are rarely interacted with at all.
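As a rough illustration of measuring popularity, the sketch below counts interactions per item and reports how much of the total the most popular items capture. The interaction log and the helper name `head_share` are hypothetical.

```python
from collections import Counter

# Hypothetical interaction log: one item ID per view, like, or watch event.
interactions = (
    ["matrix"] * 6 + ["interstellar"] * 3 + ["arrival", "moon", "primer"]
)

# Popularity = number of past interactions per item.
popularity = Counter(interactions)


def head_share(popularity, top_n):
    """Fraction of all interactions captured by the top_n most popular items."""
    top = popularity.most_common(top_n)
    return sum(count for _, count in top) / sum(popularity.values())


print(popularity.most_common())
print(f"share of top 2 items: {head_share(popularity, 2):.2f}")
```

A head share close to 1 for a small `top_n` is one simple sign of a long tail: a few items absorb most of the interactions.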

Aggregate diversity is a metric that measures the diversity of items across the recommendation lists of all users. Increasing aggregate diversity matters because it leads to a more even distribution of items across the recommendation lists, which mitigates the long-tail problem.
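One simple way to compute aggregate diversity is to count the distinct items that appear in any user's recommendation list. The sketch below does that and, as an assumption of this example, normalizes by the catalog size so the value falls between 0 and 1. The user IDs, item IDs, and catalog size are hypothetical.

```python
def aggregate_diversity(recommendation_lists, catalog_size):
    """Share of the catalog that appears in at least one recommendation list."""
    distinct_items = set()
    for items in recommendation_lists.values():
        distinct_items.update(items)
    return len(distinct_items) / catalog_size


# Every user receives the same three popular movies.
homogeneous = {
    "user_1": ["matrix", "interstellar", "arrival"],
    "user_2": ["matrix", "interstellar", "arrival"],
    "user_3": ["matrix", "interstellar", "arrival"],
}

# Each user receives a different mix, including less popular movies.
diverse = {
    "user_1": ["matrix", "moon", "primer"],
    "user_2": ["interstellar", "arrival", "solaris"],
    "user_3": ["matrix", "gattaca", "contact"],
}

print(aggregate_diversity(homogeneous, catalog_size=10))
print(aggregate_diversity(diverse, catalog_size=10))
```

Tracking this number across model versions, or over time, gives a cheap offline signal of whether recommendations are collapsing onto the same few items.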

Another approach to detecting degenerate feedback loops is to measure the hit rate against popularity. First, divide items into buckets based on their popularity: for example, bucket 1 consists of items that have been interacted with fewer than 100 times, bucket 2 consists of items that have been interacted with more than 100 times but fewer than 1,000 times, and so on. Then measure the prediction accuracy of the recommender system for each of these buckets. If the recommender system is much better at recommending popular items than less popular ones, it likely suffers from popularity bias.
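The bucketing procedure can be sketched as follows. The evaluation format is an assumption of this example: each test case pairs a held-out item the user actually interacted with and the list the system recommended, and a "hit" means the held-out item appears in that list. The popularity counts and test cases are made up, and an item with exactly 100 interactions is placed in the middle bucket.

```python
from collections import defaultdict


def popularity_bucket(interaction_count):
    """Map an interaction count to a popularity bucket label."""
    if interaction_count < 100:
        return "<100"
    if interaction_count < 1000:
        return "100-999"
    return ">=1000"


def hit_rate_by_bucket(test_cases, popularity):
    """test_cases: list of (held_out_item, recommended_items) pairs."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for held_out_item, recommended_items in test_cases:
        bucket = popularity_bucket(popularity[held_out_item])
        totals[bucket] += 1
        if held_out_item in recommended_items:
            hits[bucket] += 1
    return {bucket: hits[bucket] / totals[bucket] for bucket in totals}


# Hypothetical interaction counts and offline test cases.
popularity = {"matrix": 5000, "interstellar": 800, "primer": 40, "moon": 60}
test_cases = [
    ("matrix", ["matrix", "interstellar"]),
    ("matrix", ["matrix", "moon"]),
    ("interstellar", ["matrix", "interstellar"]),
    ("interstellar", ["matrix", "primer"]),
    ("primer", ["matrix", "interstellar"]),
    ("moon", ["matrix", "interstellar"]),
]

rates = hit_rate_by_bucket(test_cases, popularity)
for bucket, rate in rates.items():
    print(f"{bucket}: hit rate {rate:.2f}")
```

In this toy data the hit rate drops from the most popular bucket to the least popular one, which is the pattern that suggests popularity bias.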

Once a system is in production, if we notice that its predictions become more homogeneous over time, it likely suffers from degenerate feedback loops.

How to correct degenerate feedback loops?

Two common approaches to overcoming degenerate feedback loops are Randomization and Positional Features.

Randomization

We’ve seen that, over time in production, a recommender system can start giving homogeneous predictions. Introducing randomization into the predictions can reduce this homogeneity.

In the case of recommender systems, instead of showing the users only the items that the system ranks highly for them, we show users random items and use their feedback to determine the true quality of these items.
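A minimal sketch of this idea is shown below. It makes one assumption beyond the text: instead of randomizing the whole list, it reserves a few slots for items sampled uniformly from the rest of the catalog, so most of the list still comes from the ranking. The function name, parameters, and item IDs are hypothetical.

```python
import random


def recommend_with_exploration(ranked_items, candidate_pool, k, n_random, rng):
    """Return k items: the top-ranked ones plus n_random uniformly sampled ones."""
    top = ranked_items[: k - n_random]
    # Sample only from items that are not already in the top slots.
    remaining = [item for item in candidate_pool if item not in top]
    explore = rng.sample(remaining, n_random)
    return top + explore


ranked_items = ["matrix", "interstellar", "arrival", "gattaca", "contact"]
candidate_pool = ranked_items + ["moon", "primer", "solaris", "sunshine"]

rng = random.Random(0)  # fixed seed so the example is repeatable
recommendations = recommend_with_exploration(
    ranked_items, candidate_pool, k=5, n_random=2, rng=rng
)
print(recommendations)
```

The clicks collected on the randomly placed items are less affected by the existing ranking, so they give a cleaner signal of how good those items actually are.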

TikTok is one example of a system that follows this approach. Each new video is randomly assigned an initial pool of traffic (which can be up to hundreds of impressions). This pool of traffic is used to evaluate each video’s unbiased quality to determine whether it should be moved to a bigger pool of traffic or be marked as irrelevant.

Though randomization improves diversity, it can harm the user experience: if the recommendations are completely random, users may lose interest in the product. There are, however, more intelligent exploration approaches that come with an acceptable loss in prediction accuracy.

Positional Features

As discussed earlier, the position of a recommended item can affect how likely the user is to select it.

Consider the movie recommender system example, where we recommend five movies to a user each time. Suppose we notice that the top-recommended movie is much more likely to be clicked on than the other four. We can’t tell whether our model is exceptionally good at picking the top movie or whether users click on any movie as long as it’s recommended at the top.

If the position in which a prediction is shown affects its feedback in any way, we might want to encode the position information using positional features. Positional features can be numerical (e.g., positions are 1, 2, 3,…) or Boolean (e.g., whether a prediction is shown in the first position or not).

During training, we add “whether a movie is recommended first” as a feature to the training data. This feature allows our model to learn how much being the top recommendation influences how likely a movie is to be clicked on.

During inference, we want to predict whether a user will click on a movie regardless of where the movie is recommended, so we might set the “1st Position” feature to False for every candidate. We then look at the model’s predictions for the various movies for each user and choose the order in which to show them.
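The training and inference steps can be sketched with a small logistic regression written in plain Python. Everything here is an assumption made for illustration: the click log is synthetic, each movie is reduced to a single hypothetical `relevance` feature, and the true click behavior is defined by the formula in `make_click_log`.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def make_click_log(n_rows, rng):
    """Synthetic log where clicks depend on relevance AND on being shown first."""
    rows = []
    for _ in range(n_rows):
        relevance = rng.random()
        is_first = 1.0 if rng.random() < 0.2 else 0.0  # 1 of 5 slots is first
        p_click = sigmoid(3.0 * relevance - 3.0 + 2.0 * is_first)
        clicked = 1.0 if rng.random() < p_click else 0.0
        rows.append((relevance, is_first, clicked))
    return rows


def train(rows, epochs=300, learning_rate=1.0):
    """Fit logistic regression with full-batch gradient descent."""
    w_relevance = w_first = bias = 0.0
    n = len(rows)
    for _ in range(epochs):
        g_relevance = g_first = g_bias = 0.0
        for relevance, is_first, clicked in rows:
            z = w_relevance * relevance + w_first * is_first + bias
            error = sigmoid(z) - clicked
            g_relevance += error * relevance
            g_first += error * is_first
            g_bias += error
        w_relevance -= learning_rate * g_relevance / n
        w_first -= learning_rate * g_first / n
        bias -= learning_rate * g_bias / n
    return w_relevance, w_first, bias


def predict(weights, relevance, is_first):
    w_relevance, w_first, bias = weights
    return sigmoid(w_relevance * relevance + w_first * is_first + bias)


# Training: the positional feature is part of the training data.
weights = train(make_click_log(1000, random.Random(0)))
w_relevance, w_first, bias = weights
print(f"learned weight of the first-position feature: {w_first:.2f}")

# Inference: set the positional feature to False (0.0) for every candidate.
candidates = {"Matrix": 0.6, "Interstellar": 0.8, "Arrival": 0.3}
ranking = sorted(
    candidates,
    key=lambda movie: predict(weights, candidates[movie], is_first=0.0),
    reverse=True,
)
print(ranking)
```

The weight learned for the positional feature absorbs the click boost that comes from the top slot, so the scores used for ranking at inference time reflect the movie rather than where it was shown.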

This is a naive example, because doing this alone might not be enough to combat degenerate feedback loops. A more sophisticated approach would be to use two different models. The first model predicts the probability that the user will see and consider a recommendation, taking into account the position at which it will be shown. The second model then predicts the probability that the user will click on the item given that they saw and considered it. The second model doesn’t consider positions at all.
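To make the division of labor concrete, here is a small sketch of how the outputs of the two models might be combined. The two lookup tables stand in for trained models and contain made-up numbers, and multiplying the two probabilities is an assumption of this example (a click requires the item to be seen first).

```python
# Stand-in for model 1: probability that a slot is seen and considered.
P_SEEN_BY_POSITION = {1: 0.9, 2: 0.6, 3: 0.4, 4: 0.3, 5: 0.2}

# Stand-in for model 2: probability of a click once the item has been seen.
# It has no notion of position.
P_CLICK_IF_SEEN = {"Matrix": 0.30, "Interstellar": 0.45, "Arrival": 0.20}


def expected_click_probability(movie, position):
    """P(click) = P(seen | position) * P(click | seen, movie)."""
    return P_SEEN_BY_POSITION[position] * P_CLICK_IF_SEEN[movie]


def rank_by_quality(movies):
    """Order movies using only the position-free second model."""
    return sorted(movies, key=P_CLICK_IF_SEEN.get, reverse=True)


print(rank_by_quality(["Matrix", "Interstellar", "Arrival"]))
print(expected_click_probability("Matrix", position=1))
print(expected_click_probability("Interstellar", position=5))
```

In this toy setup, Matrix in the first slot is expected to get more clicks than Interstellar in the fifth slot, even though the second model rates Interstellar higher. Ranking by the second model alone keeps that position effect out of the ordering.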

Reference: Designing Machine Learning Systems by Chip Huyen

...

Feedback is welcomed 💬